Digital Image Processing 1st Module

What is Digital image processing?

Digital image processing means processing a digital image by means of a digital computer. Equivalently, it is the use of computer algorithms either to obtain an enhanced image or to extract useful information from it.

Unlike humans, who are limited to the visual band of the electromagnetic (EM) spectrum, imaging machines cover almost the entire EM spectrum, ranging from gamma rays to radio waves. They can also operate on images generated by sources that humans are not accustomed to associating with images.

These include ultrasound, electron microscopy, and computer-generated images. Thus DIP encompasses a wide and varied field of applications.

There are three types of computerized processes: low-level, mid-level, and high-level. Low-level processes involve primitive operations such as noise reduction, contrast enhancement, and image sharpening. Here both the input and the output are images.

Mid-level processes involve tasks such as segmentation (partitioning an image into regions or objects), description of those objects to reduce them to a form suitable for computer processing, and classification of individual objects. Here the input is an image, but the output is a set of attributes extracted from that image.

High-level processing involves "making sense" of an ensemble of recognized objects, as in image analysis, and performing the cognitive functions normally associated with human vision.
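
The difference between the first two levels can be sketched in a few lines of Python. This is only an illustration, assuming NumPy is available; the function names, the test image, and the threshold of 128 are hypothetical choices, not part of these notes. The first function takes an image and returns an image (low level), while the second takes an image and returns attributes (mid level).

```python
import numpy as np

def stretch_contrast(img):
    """Low-level process: image in, image out (linear stretch to 0..255)."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:                      # flat image: nothing to stretch
        return np.zeros(img.shape, dtype=np.uint8)
    return np.round((img - lo) / (hi - lo) * 255).astype(np.uint8)

def describe_bright_region(img, threshold=128):
    """Mid-level process: image in, attributes out (area and centroid)."""
    mask = img >= threshold
    area = int(mask.sum())
    if area == 0:
        return {"area": 0, "centroid": None}
    rows, cols = np.nonzero(mask)
    return {"area": area, "centroid": (float(rows.mean()), float(cols.mean()))}

# A low-contrast 4x4 test image with a slightly brighter 2x2 square in the middle.
image = np.full((4, 4), 100, dtype=np.uint8)
image[1:3, 1:3] = 120

enhanced = stretch_contrast(image)             # still an image
attributes = describe_bright_region(enhanced)  # a description, not an image
print(enhanced)
print(attributes)
```

High-level processing (interpreting what the recognized objects mean) is not shown, since it does not reduce to a short array operation.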

Image processing mainly includes the following steps:

1. Importing the image via image acquisition tools;

2. Analysing and manipulating the image;

3. Output, in which the result can be an altered image or a report based on the analysis of that image.

What is an image?

An image is defined as a two-dimensional function, F(x, y), where x and y are spatial coordinates, and the amplitude of F at any pair of coordinates (x, y) is called the intensity of the image at that point. When x, y, and the amplitude values of F are all finite, discrete quantities, we call the image a digital image.

In other words, an image can be defined by a two-dimensional array specifically arranged in rows and columns.

A digital image is composed of a finite number of elements, each of which has a particular value at a particular location. These elements are referred to as picture elements, image elements, or pixels. Pixel is the term most widely used to denote the elements of a digital image.
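
The array view of an image can be made concrete with a small sketch, assuming NumPy. The pixel values are made up for illustration, and treating x as the row index and y as the column index is an assumed convention, since the notes do not fix one.

```python
import numpy as np

# A 3x4 digital image: 3 rows, 4 columns, 8-bit intensities (0..255).
F = np.array([[  0,  64, 128, 255],
              [ 32,  96, 160, 224],
              [ 16,  80, 144, 208]], dtype=np.uint8)

rows, cols = F.shape
x, y = 1, 2                      # assumed convention: x = row, y = column
intensity = int(F[x, y])         # amplitude of F at (x, y)

print(rows, cols)                # number of rows and columns
print(intensity)                 # intensity of the pixel at (1, 2)
print(F.size)                    # total number of pixels (finite)
```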

Types of an image

- BINARY IMAGE– A binary image, as its name suggests, contains only two pixel values, 0 and 1, where 0 refers to black and 1 refers to white. This kind of image is also known as monochrome.

- BLACK AND WHITE IMAGE– An image that consists of only black and white is called a black and white image.

- 8 bit COLOR FORMAT– This is the most common image format. It has 256 different shades and is commonly known as a grayscale image. In this format, 0 stands for black, 255 stands for white, and 127 stands for mid-gray.

- 16 bit COLOR FORMAT– This is a color image format. It has 65,536 different colors and is also known as High Color format. In this format the distribution of color is not the same as in a grayscale image.

A 16-bit format is divided into three channels, red, green, and blue: the familiar RGB format.
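
The formats above can be illustrated with a short sketch, assuming NumPy. The notes do not say how the 16 bits are divided among the three channels. The 5-6-5 split used here (5 bits red, 6 bits green, 5 bits blue) is a common convention and is an assumption, as are the function names and the threshold of 128.

```python
import numpy as np

def to_binary(gray, threshold=128):
    """Reduce an 8-bit grayscale image to a binary image (0 = black, 1 = white)."""
    return (gray >= threshold).astype(np.uint8)

def pack_rgb565(r, g, b):
    """Pack 8-bit R, G, B values into one 16-bit value (assumed 5-6-5 layout)."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

gray = np.array([[0, 127],
                 [128, 255]], dtype=np.uint8)
binary = to_binary(gray)

levels_8bit = 2 ** 8      # number of gray levels in an 8-bit image
colors_16bit = 2 ** 16    # number of colors in a 16-bit image

white = pack_rgb565(255, 255, 255)
red = pack_rgb565(255, 0, 0)

print(binary)
print(levels_8bit, colors_16bit)
print(hex(white), hex(red))
```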

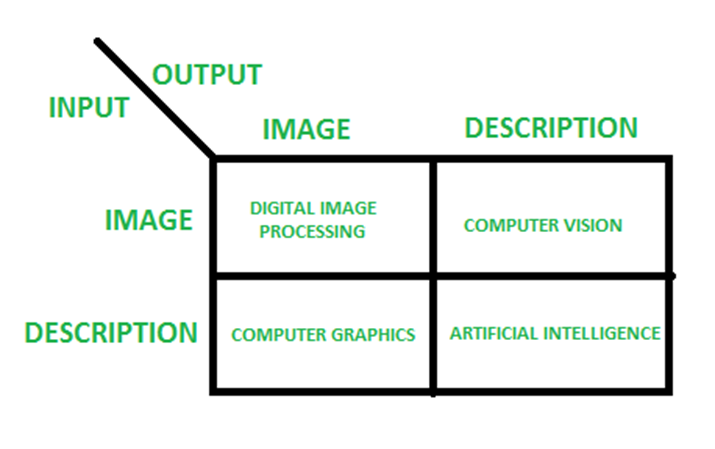

OVERLAPPING FIELDS WITH IMAGE PROCESSING

According to block 1, if the input is an image and the output is also an image, the process is termed Digital Image Processing.

According to block 2, if the input is an image and the output is some kind of information or description, the process is termed Computer Vision.

According to block 3, if the input is a description or code and the output is an image, the process is termed Computer Graphics.

According to block 4, if the input is a description, keywords, or code and the output is also a description or keywords, the process is termed Artificial Intelligence.

Examples of fields that use digital image processing

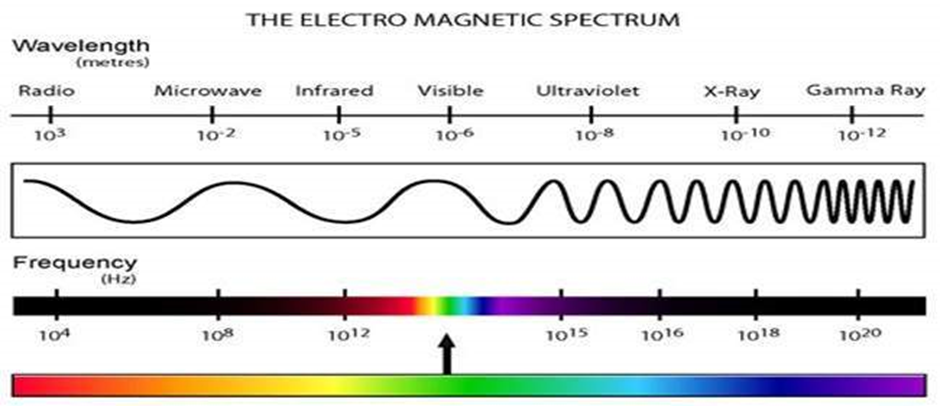

One of the simplest ways to develop a basic understanding of the extent of image processing applications is to categorize images according to their source (e.g., visual, x-ray, and so on). The principal energy source for images in use today is the electromagnetic energy spectrum. Other important sources of energy include acoustic, ultrasonic, and electronic (in the form of electron beams used in electron microscopy). Synthetic images used for modeling and visualization are generated by computer.

Electromagnetic waves can be conceptualized as propagating sinusoidal waves of varying wavelengths, or they can be thought of as a stream of massless particles, each traveling in a wavelike pattern and moving at the speed of light. Each massless particle contains a certain amount (bundle) of energy. Each bundle of energy is called a photon. If spectral bands are grouped according to energy per photon, as shown in the figure, we obtain a spectrum ranging from gamma rays (highest energy) at one end to radio waves (lowest energy) at the other.
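
The ordering of the bands by energy per photon follows from the standard relation E = hc/λ, which is not stated in these notes but is well established. The sketch below evaluates it for one representative wavelength per band. The wavelengths are rough illustrative choices, not band definitions.

```python
PLANCK_H = 6.62607015e-34        # Planck constant, J*s
LIGHT_C = 299_792_458.0          # speed of light, m/s
JOULES_PER_EV = 1.602176634e-19  # joules in one electronvolt

def photon_energy_ev(wavelength_m):
    """Energy of one photon in electronvolts, E = h*c / wavelength."""
    return PLANCK_H * LIGHT_C / wavelength_m / JOULES_PER_EV

# Representative wavelengths in metres (illustrative values only).
bands = {
    "gamma ray": 1e-12,
    "x-ray": 1e-10,
    "visible (green)": 550e-9,
    "microwave": 1e-2,
    "radio": 1.0,
}

for name, wavelength in bands.items():
    print(f"{name:16s} {photon_energy_ev(wavelength):.3e} eV")
```

The printed energies decrease from the gamma-ray entry to the radio entry, matching the ordering described above.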

Gamma-ray imaging

Major uses of imaging based on gamma rays include nuclear medicine and astronomical observations. In nuclear medicine, the approach is to inject a patient with a radioactive isotope that emits gamma rays as it decays. Images are produced from emissions collected by gamma ray detectors.

Positron emission tomography (PET)

The patient is given a radioactive isotope that emits positrons as it decays. When a positron meets an electron, both are annihilated and two gamma rays are given off. These are detected, and a tomographic image is created using the basic principles of tomography.

X-ray Imaging (among the oldest sources of EM radiation used for imaging)

X-rays for medical and industrial imaging are generated using an x-ray tube, which is a vacuum tube with a cathode and an anode. The cathode is heated, causing free electrons to be released. These electrons flow at high speed to the positively charged anode. When the electrons strike a nucleus, energy is released in the form of x-ray radiation. The energy (penetrating power) of the x-rays is controlled by a voltage applied across the anode, and the number of x-rays is controlled by a current applied to the filament in the cathode.

Angiography is another major application, in an area called contrast enhancement radiography. The procedure is used to obtain images of blood vessels. A catheter (a small, flexible, hollow tube) is inserted, for example, into an artery or vein in the groin. The catheter is threaded into the blood vessel and guided to the area to be studied. When the catheter reaches the site under investigation, an x-ray contrast medium is injected through the catheter. This enhances the contrast of the blood vessels and enables the radiologist to see any irregularities or blockages.

Imaging in the visible and infrared bands

The infrared band is often used in conjunction with visual imaging. Applications range from light microscopy and astronomy to remote sensing, industry, and law enforcement.

E.g.:

Microscopy – applications range from enhancement to measurement

Remote sensing – weather observation using multispectral images from satellites

Industry – inspecting bottled drinks for under-filled bottles

Law enforcement – biometrics

Imaging in the microwave band

The dominant application of imaging in the microwave band is radar. The unique feature of imaging radar is its ability to collect data over virtually any region at any time, regardless of weather or ambient lighting conditions. Some radar waves can penetrate clouds and, under certain conditions, can also see through vegetation, ice, and extremely dry sand. In many cases, radar is the only way to explore inaccessible regions of the earth's surface. An imaging radar works like a flash camera in that it provides its own illumination (microwave pulses) to illuminate an area on the ground and take a snapshot image.

Imaging in the radio band

Major applications of imaging in the radio band are in medicine and astronomy. In medicine, radio waves are used in magnetic resonance imaging (MRI). This technique places a patient in a powerful magnet and passes radio waves through his or her body in short pulses. Each pulse causes a responding pulse of radio waves to be emitted by the patient's tissues. The location from which these signals originate and their strength are determined by a computer, which produces a two-dimensional picture of a section of the patient.

Applications of Digital Image Processing

Some of the major fields in which digital image processing is widely used are listed below:

· Image sharpening and restoration

· Medical field

· Remote sensing

· Transmission and encoding

· Machine/Robot vision

· Color processing

· Pattern recognition

· Video processing

· Microscopic Imaging

· Others

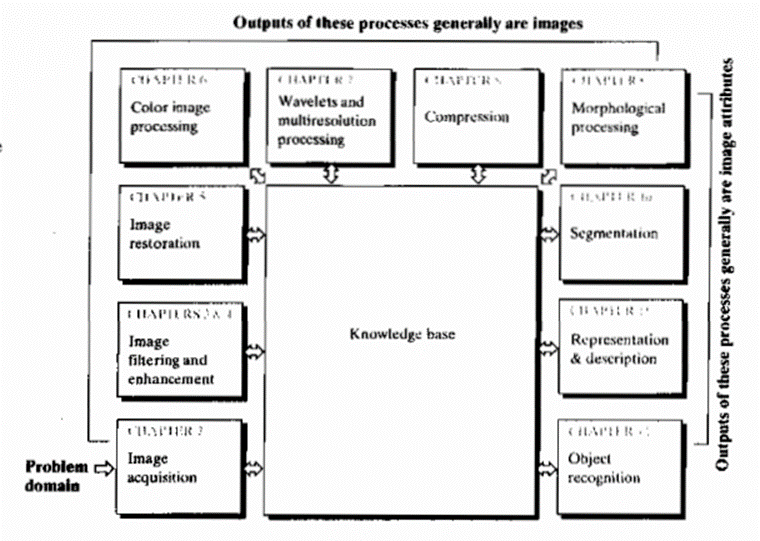

Fundamental steps in digital image processing (PHASES OF IMAGE PROCESSING):

1. ACQUISITION – This can be as simple as being given an image that is already in digital form. The main work involves:

a) Scaling

b) Color conversion (RGB to gray or vice versa)

2. IMAGE ENHANCEMENT – This is among the simplest and most appealing areas of image processing. It is used to bring out hidden detail in an image, and it is subjective.

3. IMAGE RESTORATION – This also deals with improving the appearance of an image, but it is objective: restoration is based on mathematical or probabilistic models of image degradation.

4. COLOR IMAGE PROCESSING – This deals with pseudocolor and full-color image processing, and with the color models applicable to digital image processing.

5. WAVELETS AND MULTI-RESOLUTION PROCESSING – Wavelets are the foundation for representing images at various degrees of resolution.

6. IMAGE COMPRESSION – This involves developing techniques that reduce the amount of data needed to represent an image. It mainly deals with image size or resolution.

7. MORPHOLOGICAL PROCESSING – This deals with tools for extracting image components that are useful in the representation and description of shape.

8. SEGMENTATION PROCEDURE – This partitions an image into its constituent parts or objects. Autonomous segmentation is one of the most difficult tasks in image processing.

9. REPRESENTATION & DESCRIPTION – This follows the output of the segmentation stage. Choosing a representation is only part of the solution for transforming raw data into processed data.

10. OBJECT DETECTION AND RECOGNITION – Recognition is the process that assigns a label to an object based on its descriptors.
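
The two acquisition-stage operations named in step 1, scaling and color conversion, can be sketched as follows, assuming NumPy. The notes do not specify a conversion formula or a scaling method. The ITU-R BT.601 luma weights and nearest-neighbour replication used here are common choices and are assumptions, as are the function names.

```python
import numpy as np

def rgb_to_gray(rgb):
    """Convert an HxWx3 RGB image to grayscale with a weighted channel sum.

    The weights are the ITU-R BT.601 luma coefficients (an assumed choice).
    """
    weights = np.array([0.299, 0.587, 0.114])
    return np.round(rgb.astype(np.float64) @ weights).astype(np.uint8)

def scale_nearest(img, factor):
    """Enlarge a 2-D image by an integer factor by replicating each pixel."""
    return np.repeat(np.repeat(img, factor, axis=0), factor, axis=1)

# A 2x2 RGB test image: red, green / blue, white.
rgb = np.array([[[255, 0, 0], [0, 255, 0]],
                [[0, 0, 255], [255, 255, 255]]], dtype=np.uint8)

gray = rgb_to_gray(rgb)          # 2x2 grayscale image
bigger = scale_nearest(gray, 2)  # 4x4 image, each pixel repeated 2x2

print(gray)
print(bigger.shape)
```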

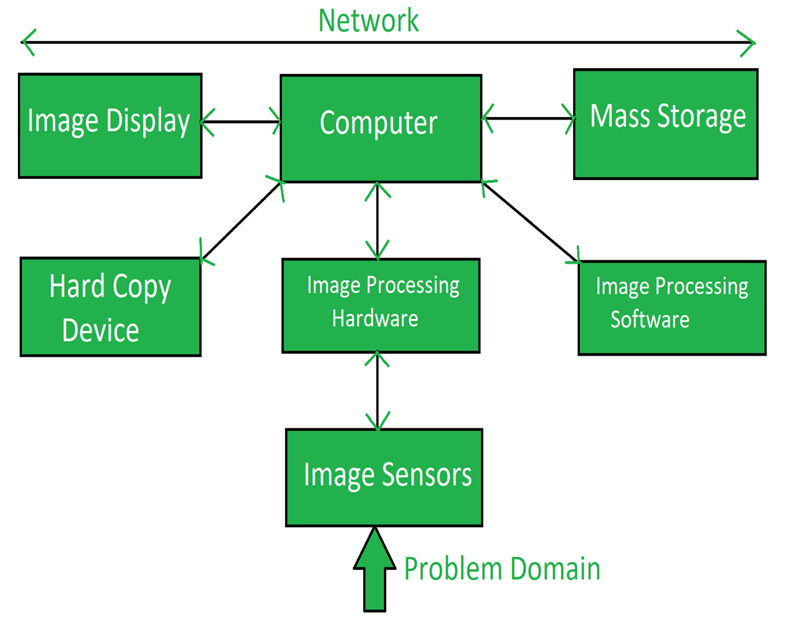

Components of Image Processing Systems

An image processing system is the combination of the different elements involved in digital image processing, that is, the processing of an image by means of a digital computer using computer algorithms.

It consists of the following components:

- Image Sensors:

Image sensors sense the intensity, amplitude, coordinates, and other features of the image and pass the result to the image processing hardware. This stage includes the problem domain.

- Image Processing Hardware:

Image processing hardware is dedicated hardware that processes the data obtained from the image sensors. It passes the result to a general-purpose computer.

- Computer:

The computer in an image processing system is a general-purpose computer, which can range from an everyday personal computer to a supercomputer.

In dedicated applications, custom computers are sometimes used to achieve a required level of performance.

- Image Processing Software:

Software for image processing consists of specialized modules that perform specific tasks. A well-designed package also includes the capability for the user to write code that, as a minimum, utilizes the specialized modules.

More sophisticated software packages allow the integration of those modules and general-purpose software commands from at least one computer language.

- Mass Storage:

This capability is a must in image processing applications. An image of size 1024 x 1024 pixels in which the intensity of each pixel is an 8-bit quantity requires one megabyte of storage space if the image is not compressed. Storage for image processing applications falls into three principal categories:

i) Short-term storage for use during processing

ii) Online storage for relatively fast retrieval

iii) Archival storage such as magnetic tapes and disks

- Hard Copy Device:

The devices for recording images include laser printers, film cameras, heat-sensitive devices, inkjet units, and digital units such as optical and CD-ROM disks. Film provides the highest possible resolution, but paper is the obvious medium of choice for written material.

- Image Display:

Image displays in use today are mainly color TV monitors. These monitors are driven by the outputs of image and graphics display cards that are an integral part of the computer system.

- Network:

Networking is almost a default function in any computer system in use today. Because of the large amount of data inherent in image processing applications, the key consideration in image transmission is bandwidth.
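
The storage figure quoted under Mass Storage (1024 x 1024 pixels at 8 bits each is one megabyte) can be checked with a short calculation. This is a minimal sketch for uncompressed images only. The function name and the `channels` parameter are hypothetical, and the 24-bit RGB case is an added illustration.

```python
def image_size_bytes(width, height, bits_per_pixel, channels=1):
    """Uncompressed storage in bytes, rounded up to a whole byte."""
    total_bits = width * height * channels * bits_per_pixel
    return (total_bits + 7) // 8

gray_bytes = image_size_bytes(1024, 1024, 8)             # 8-bit grayscale
rgb_bytes = image_size_bytes(1024, 1024, 8, channels=3)  # 8 bits per RGB channel

print(gray_bytes, gray_bytes / 2**20)   # bytes and megabytes
print(rgb_bytes, rgb_bytes / 2**20)
```

The same uncompressed size is what must cross the network when an image is transmitted, which is why bandwidth is the key consideration there.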

Elements of Visual Perception

The field of digital image processing is built on a foundation of mathematical and probabilistic formulations, but human intuition and analysis play a central role in choosing between techniques, and that choice is often made on the basis of subjective, visual judgements.

In human visual perception, the eyes act as the sensor or camera, neurons act as the connecting cable and the brain acts as the processor.

The basic elements of visual perception are:

· Structure of Eye

· Image Formation in the Eye

· Brightness Adaptation and Discrimination

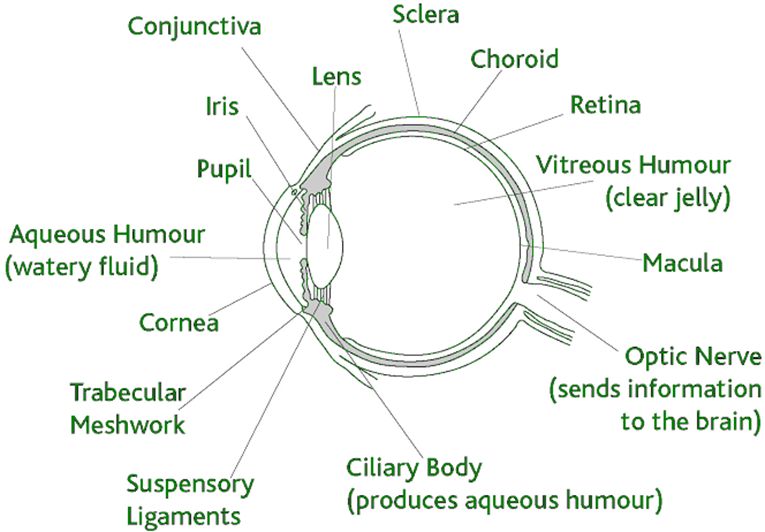

Structure of Eye:

The human eye is a slightly asymmetrical sphere with an average diameter of 20 mm to 25 mm and a volume of about 6.5 cc. The eye works much like a camera: an external object is seen in the same way that a camera takes a picture of it. Light enters the eye through a small opening called the pupil, a black-looking aperture that contracts when exposed to bright light, and is focused on the retina, which acts like camera film.

The lens, iris, and cornea are nourished by a clear fluid, known as the aqueous humor, which fills the anterior chamber. The fluid flows from the ciliary body to the pupil and is absorbed through channels in the angle of the anterior chamber. The delicate balance between aqueous production and absorption controls the pressure within the eye.

The eye contains between 6 and 7 million cones, which are highly sensitive to color. Humans see colored images in daylight because of these cones. Cone vision is also called photopic or bright-light vision.

Rods are far more numerous, between 75 and 150 million, and are distributed over the retinal surface. Rods are not involved in color vision and are sensitive to low levels of illumination.

Image Formation in the Eye:

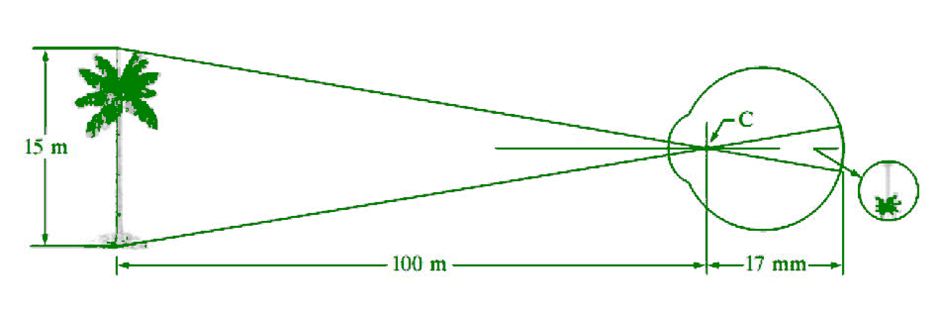

The principal difference between the lens of the eye and an ordinary optical lens is that the former is flexible. As illustrated in the figure, the radius of curvature of the anterior surface of the lens is greater than the radius of its posterior surface.

The shape of the lens is controlled by tension in the fibers of the ciliary body. To focus on distant objects, the controlling muscles cause the lens to be relatively flattened.

Similarly, these muscles allow the lens to become thicker in order to focus on objects near the eye. The distance between the center of the lens and the retina (called the focal length) varies from approximately 17 mm to about 14 mm, as the refractive power of the lens increases from its minimum to its maximum.

When the eye focuses on an object farther away than about 3 m, the lens exhibits its lowest refractive power. When the eye focuses on a nearby object, the lens is most strongly refractive.

This information makes it easy to calculate the size of the retinal image of any object. For example, suppose the observer is looking at a tree 15 m high at a distance of 100 m.

If h is the height in mm of that object in the retinal image, the geometry of Fig. 1.4 yields 15/100 = h/17, or h = 2.55 mm. The retinal image is focused primarily on the region of the fovea.
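
The similar-triangles calculation above generalizes to any object height and distance. A minimal sketch follows. The function name is hypothetical, and the default of 17 mm is the lens-to-retina distance quoted above for distant objects.

```python
def retinal_image_height_mm(object_height_m, distance_m, focal_length_mm=17.0):
    """Height of the retinal image from similar triangles:
    object_height / distance = h / focal_length.
    """
    return focal_length_mm * object_height_m / distance_m

h = retinal_image_height_mm(15, 100)   # the 15 m tree seen from 100 m
print(f"{h:.2f} mm")
```

Doubling the viewing distance halves the retinal image, as the proportion predicts.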

Perception then takes place by the relative excitation of light receptors, which transform radiant energy into electrical impulses that are ultimately decoded by the brain.

Brightness Adaptation and Discrimination:

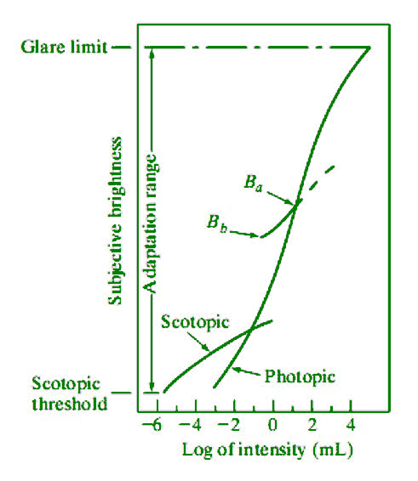

Digital images are displayed as a discrete set of intensities. The eye's ability to discriminate between different intensity levels is an important consideration in presenting image processing results.

The range of light intensity levels to which the human visual system can adapt is enormous, on the order of 10^10, from the scotopic threshold to the glare limit. Experimental evidence indicates that subjective brightness (intensity as perceived by the human visual system) is a logarithmic function of the light intensity incident on the eye.

Figure 1.5, a plot of light intensity versus subjective brightness, illustrates this characteristic. The long solid curve represents the range of intensities to which the visual system can adapt. In photopic vision alone, the range is about 10^6.

The transition from scotopic to photopic vision is gradual over the approximate range from 0.001 to 0.1 millilambert (–3 to –1 mL in the log scale), as the double branches of the adaptation curve in this range show. The essential point in interpreting the impressive dynamic range depicted is that the visual system cannot operate over such a range simultaneously.

Rather, it accomplishes this large variation by changes in its overall sensitivity, a phenomenon known as brightness adaptation. The total range of distinct intensity levels it can discriminate simultaneously is rather small when compared with the total adaptation range.
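
The log-scale figures quoted above are base-10 logarithms of intensity in millilamberts, which can be checked directly. In this sketch the function name is hypothetical, and the adaptation ranges are taken to be ten and six orders of magnitude, which is the standard reading of the figures given.

```python
import math

def to_log_ml(intensity_ml):
    """Intensity in millilamberts expressed on a base-10 log scale."""
    return math.log10(intensity_ml)

low = to_log_ml(0.001)   # lower end of the scotopic-to-photopic transition
high = to_log_ml(0.1)    # upper end of the transition

total_decades = math.log10(1e10)    # full adaptation range
photopic_decades = math.log10(1e6)  # photopic vision alone

print(round(low, 6), round(high, 6))
print(total_decades, photopic_decades)
```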